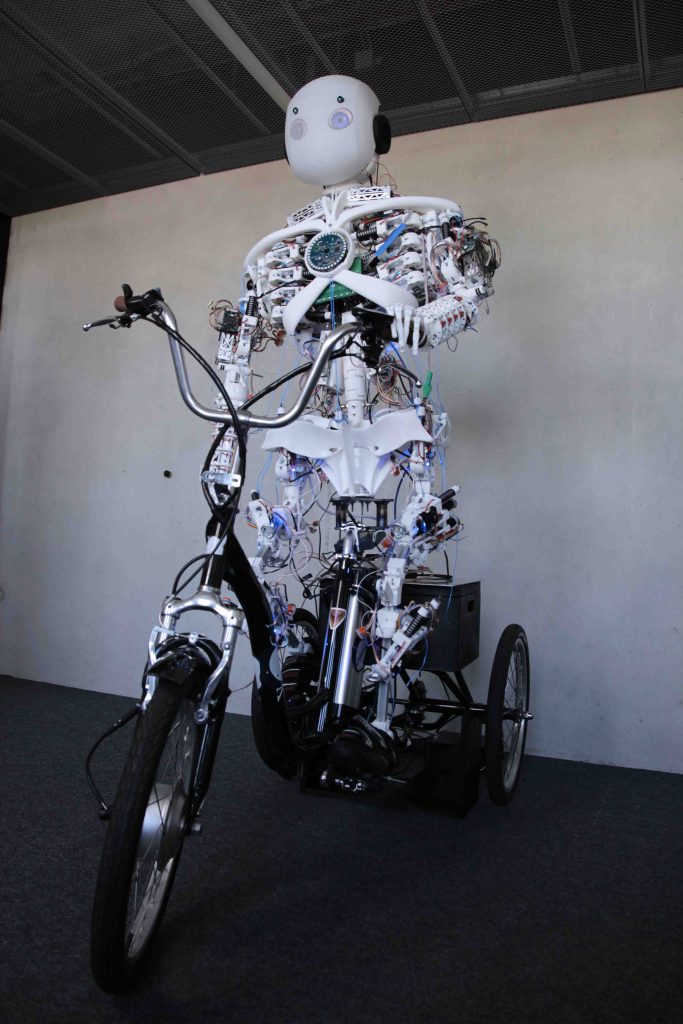

ROBOY CORE

THE STATE OF ROBOY’S TECHNOLOGY

The almost-current state of Roboy’s core technology

Roboy’s tech stack evolves fast. This page shows snapshots of mostly complete states, to give you an idea of where we were a few months back and what we are working on. If you’re interested in working with us, please reach out to collaborate@roboy.org and we’ll happily give you access to our internal documentation, the CAD repository, etc. For code, we’re live on GitHub, so just stop by.

If you need fast support, stop by on Telegram: https://t.me/roboytech

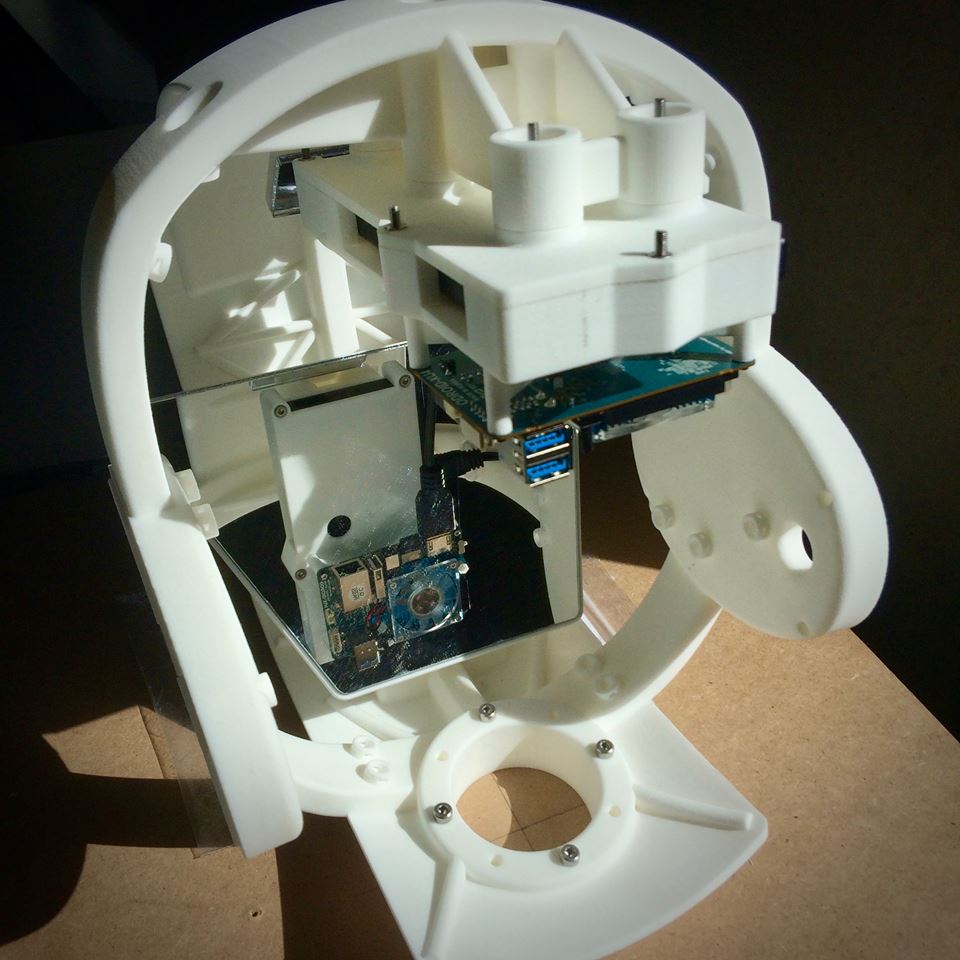

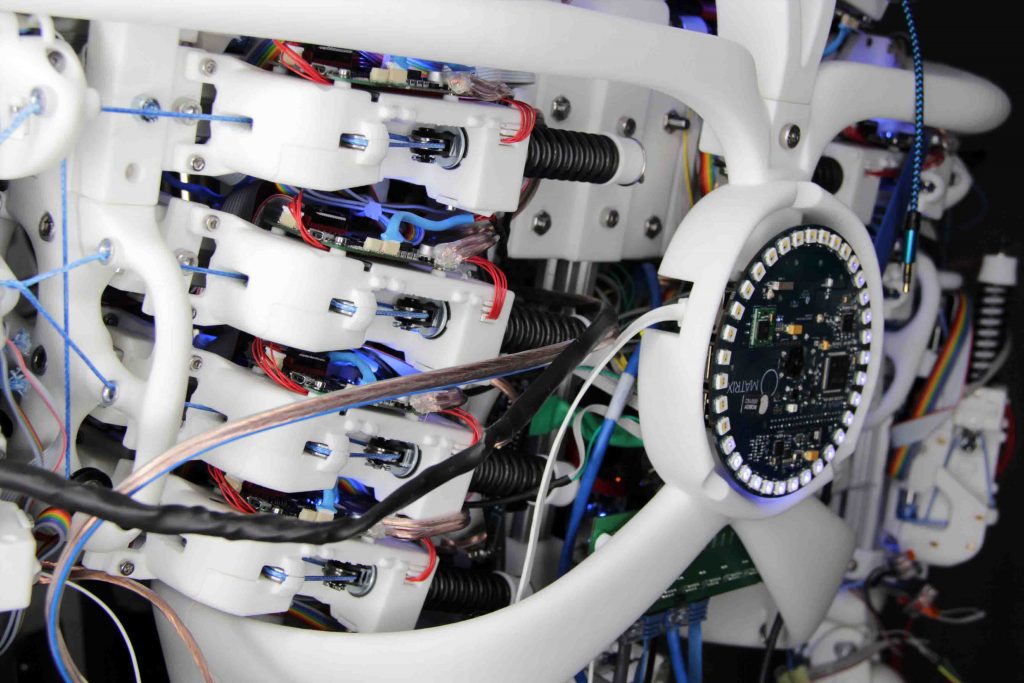

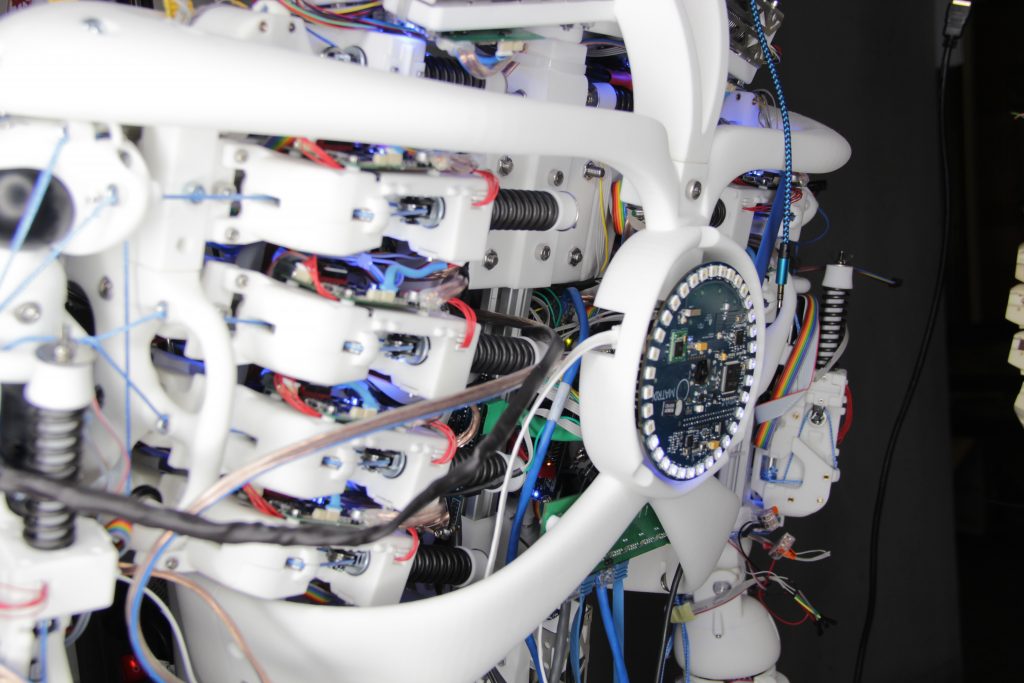

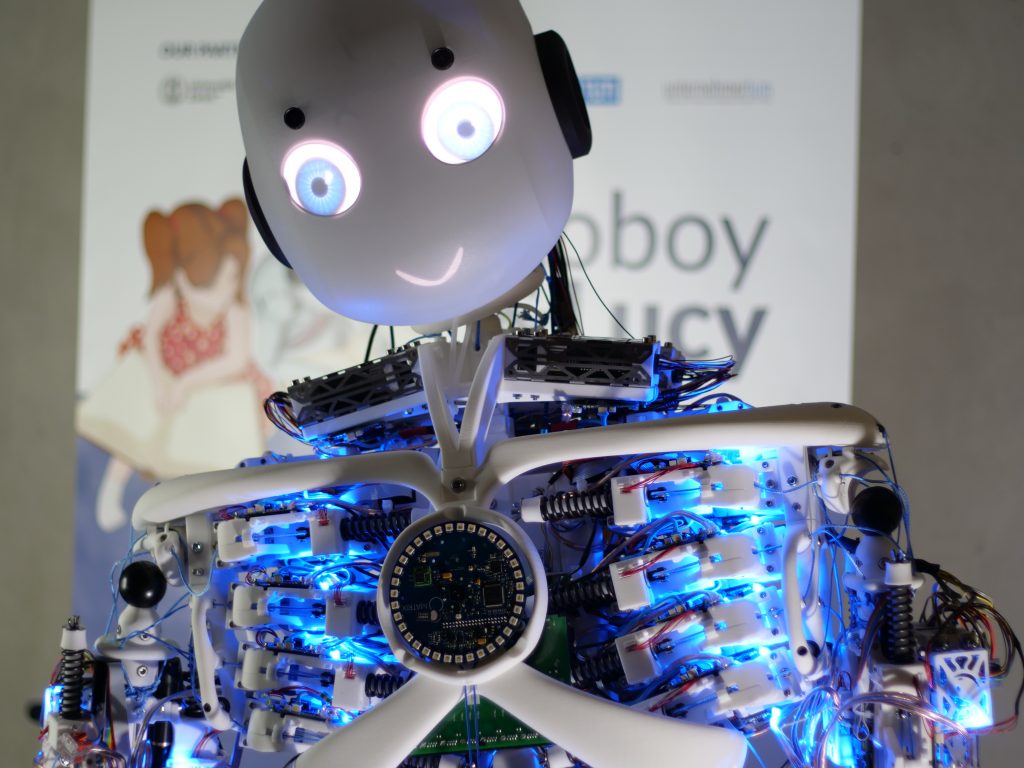

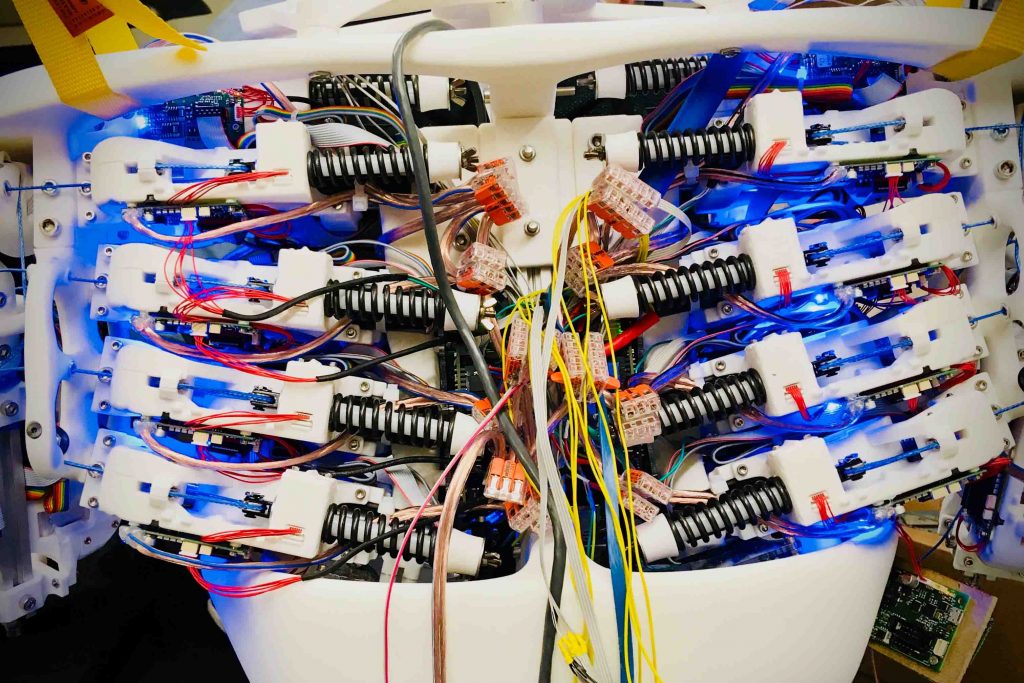

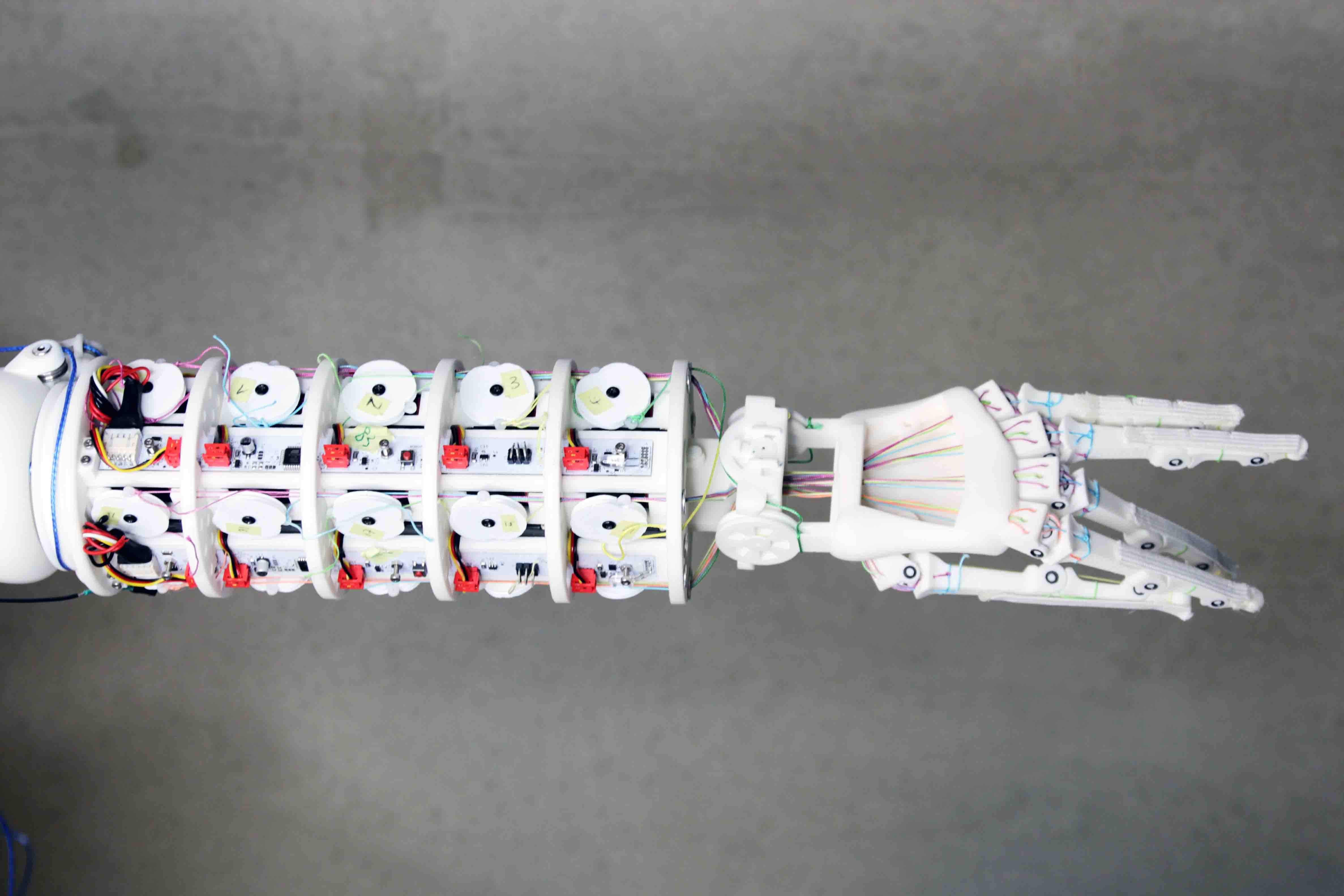

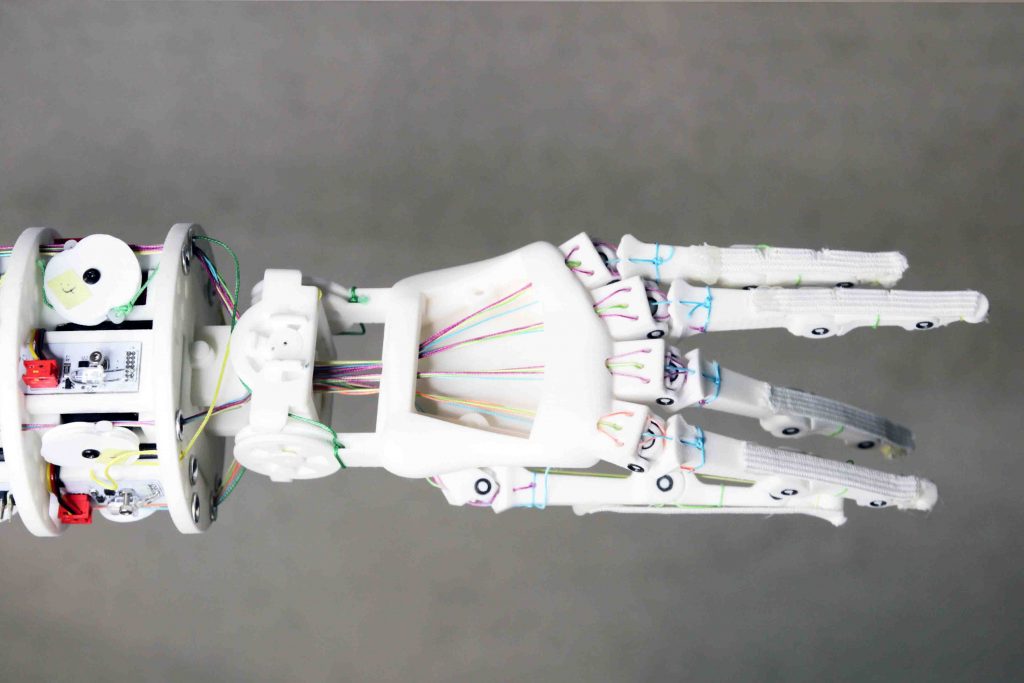

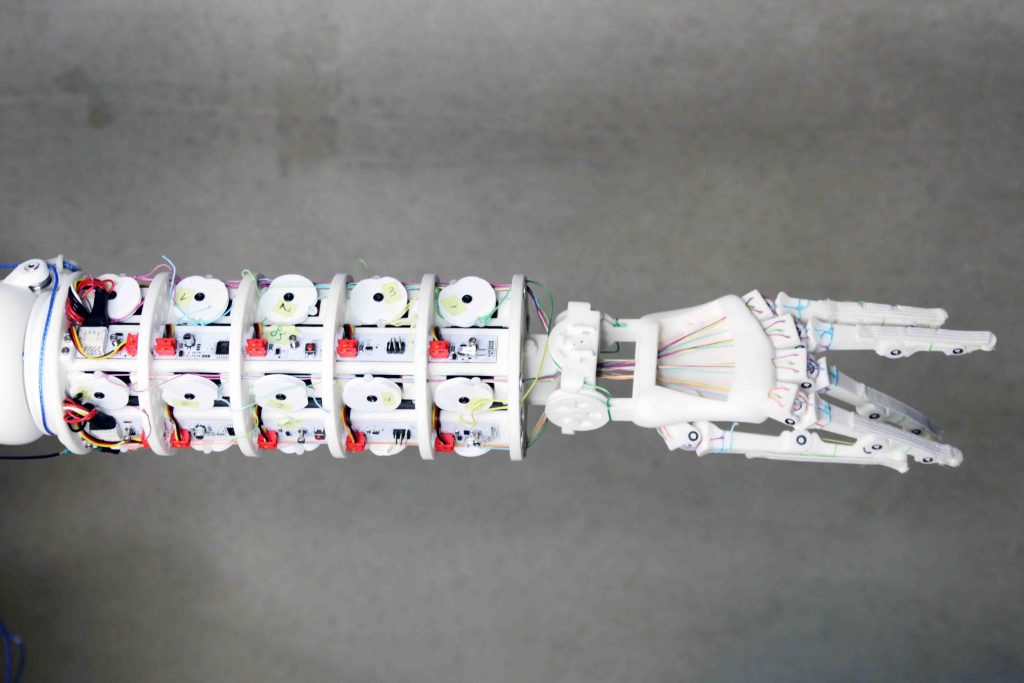

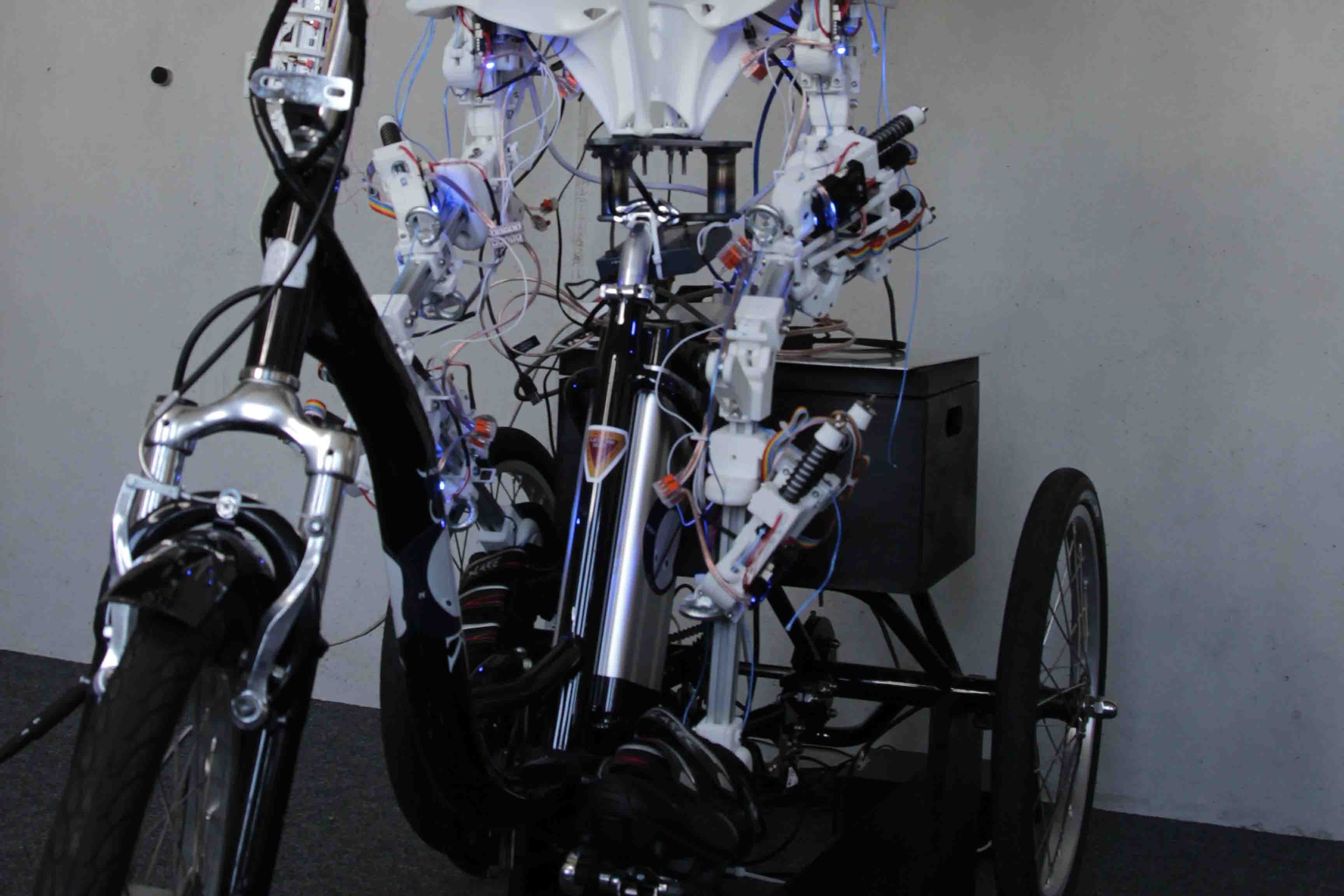

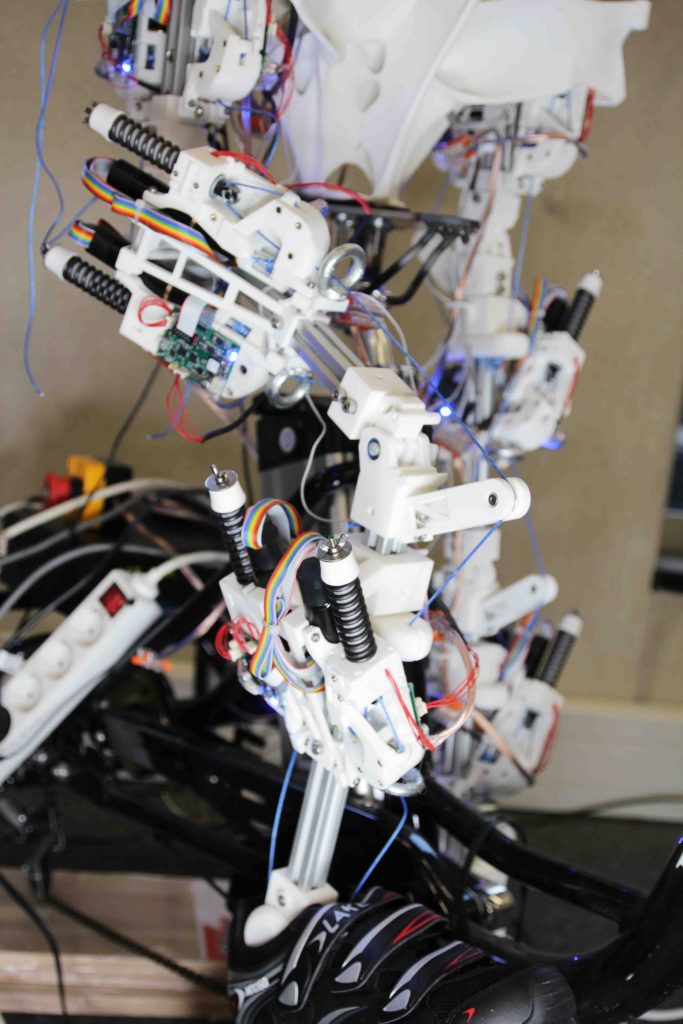

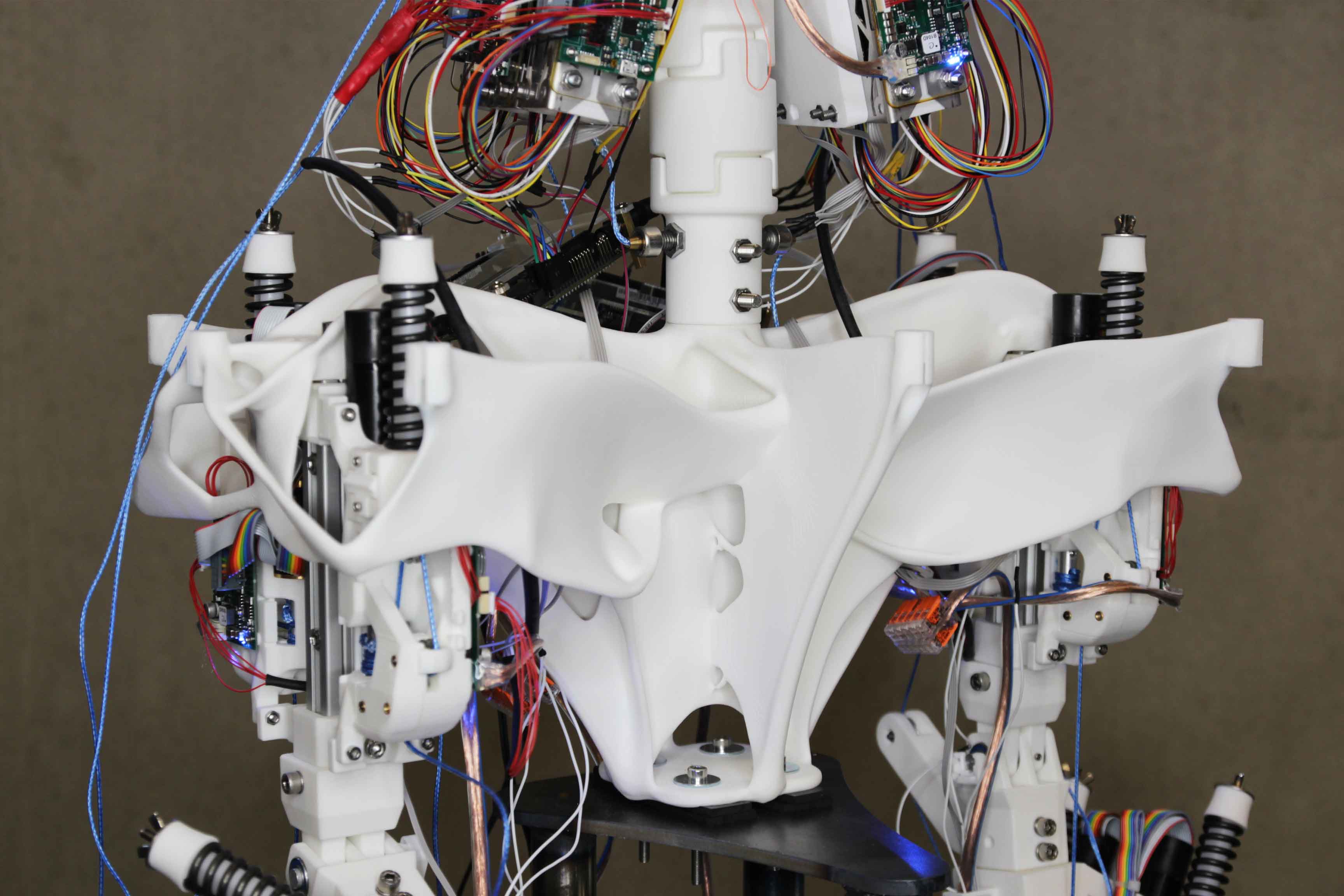

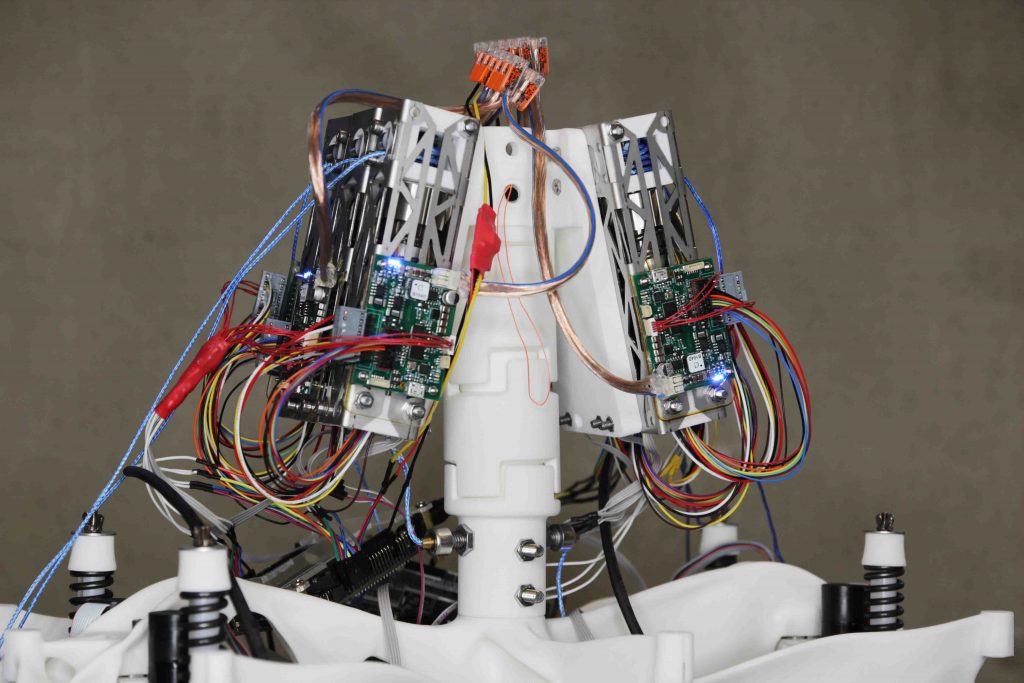

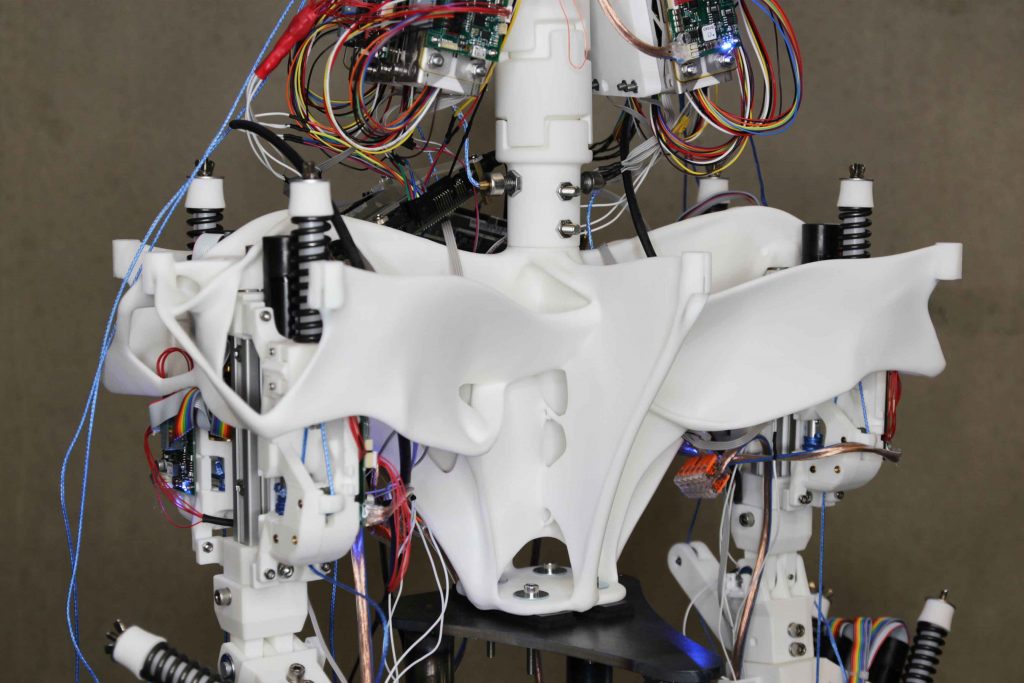

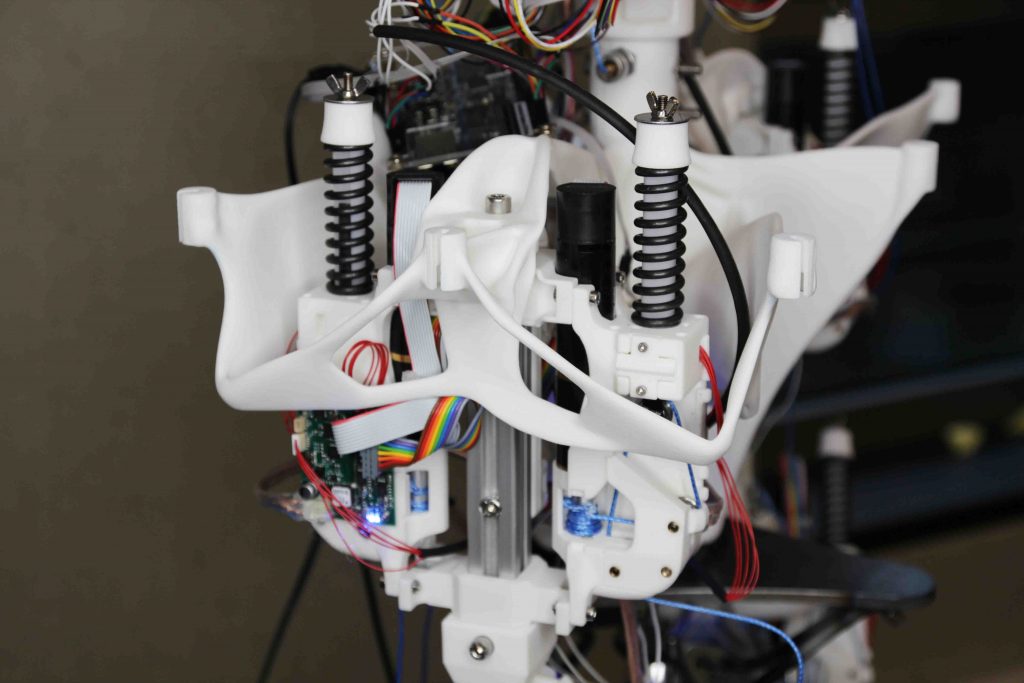

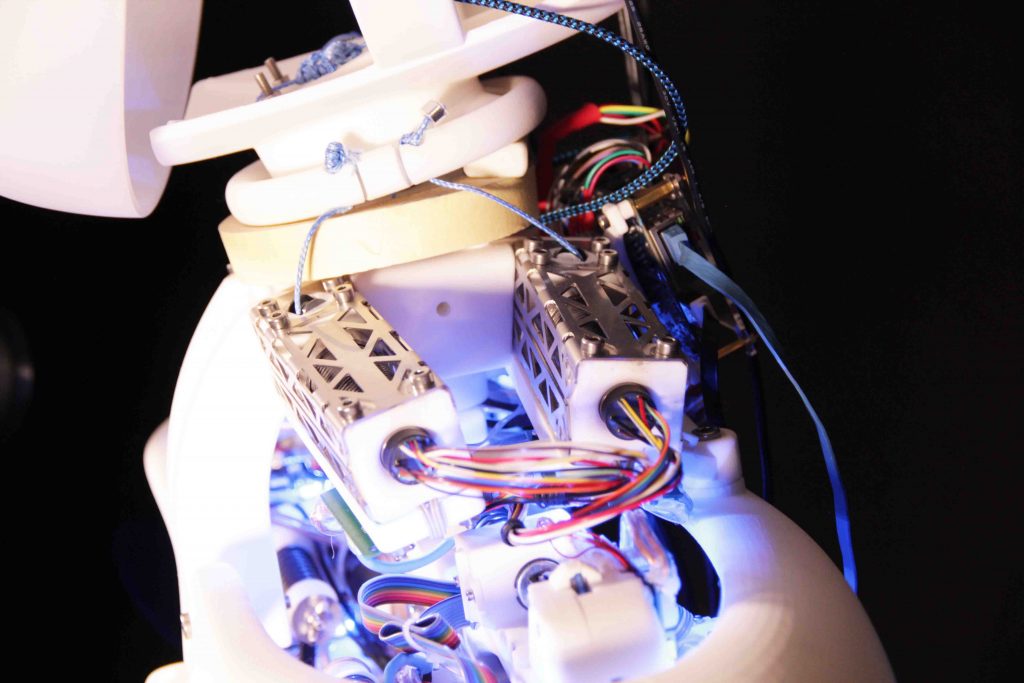

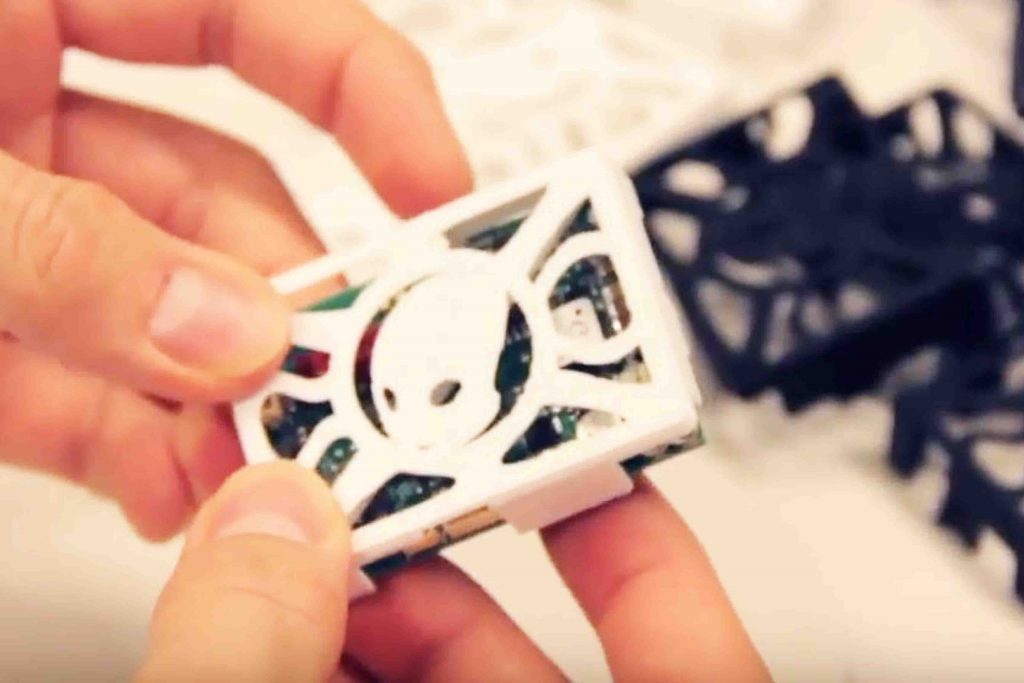

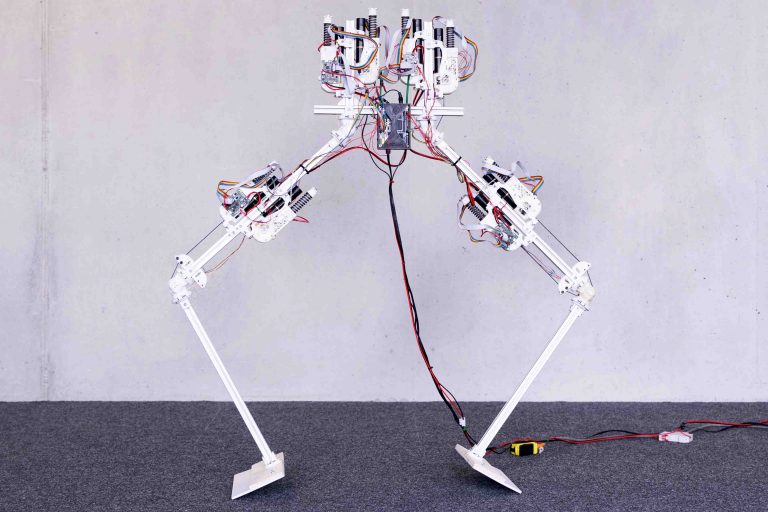

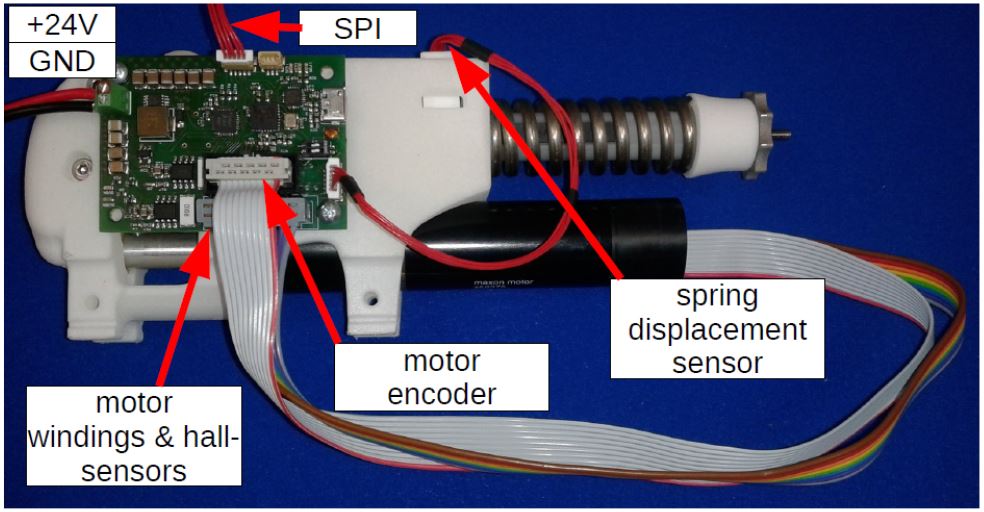

MECHATRONICS

Using generative design and 3D printing, Mechatronics creates the bodies of our robots.

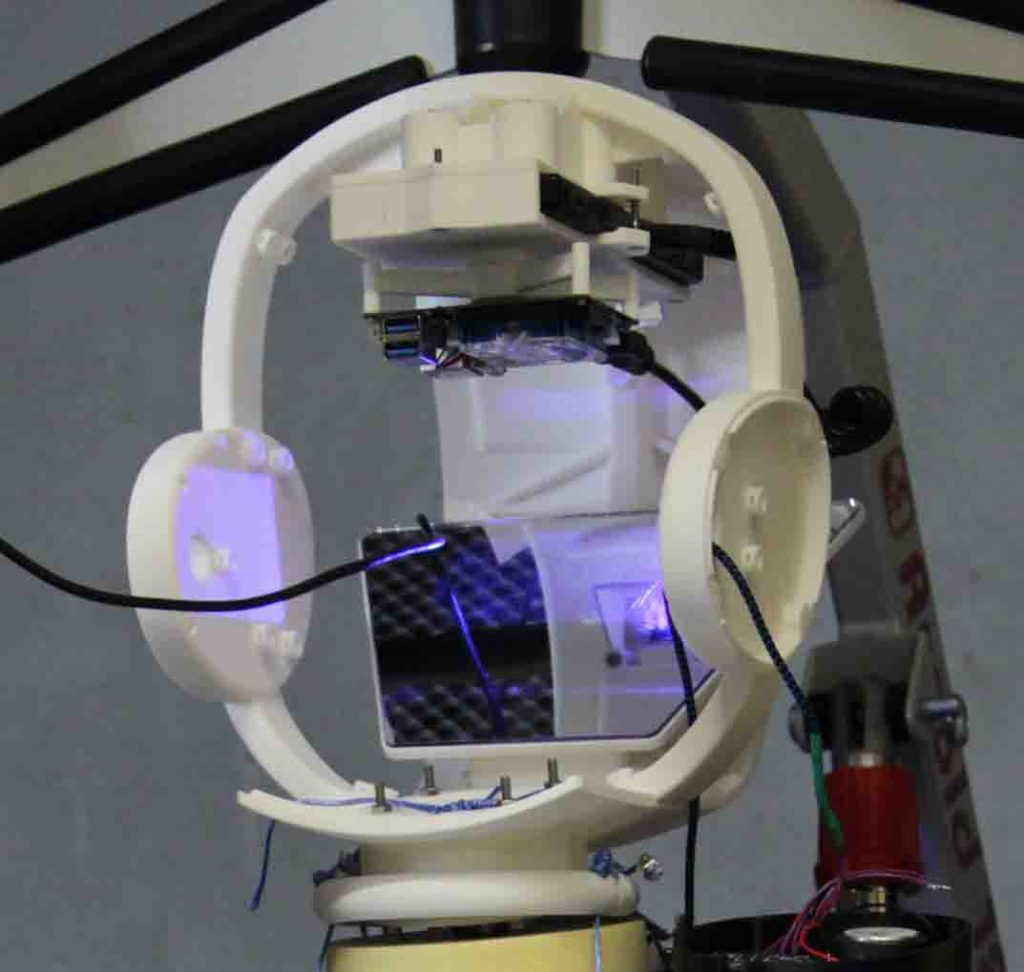

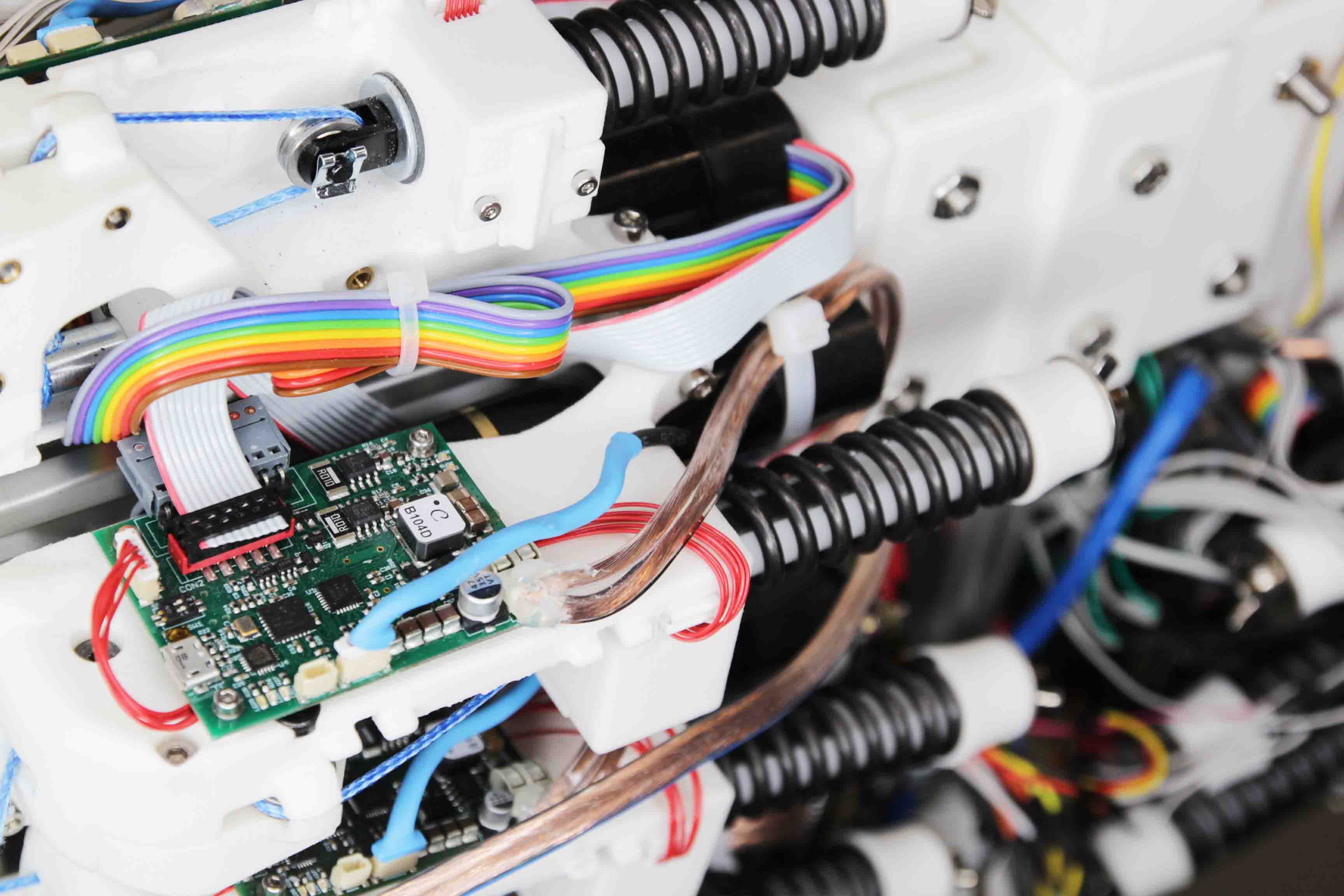

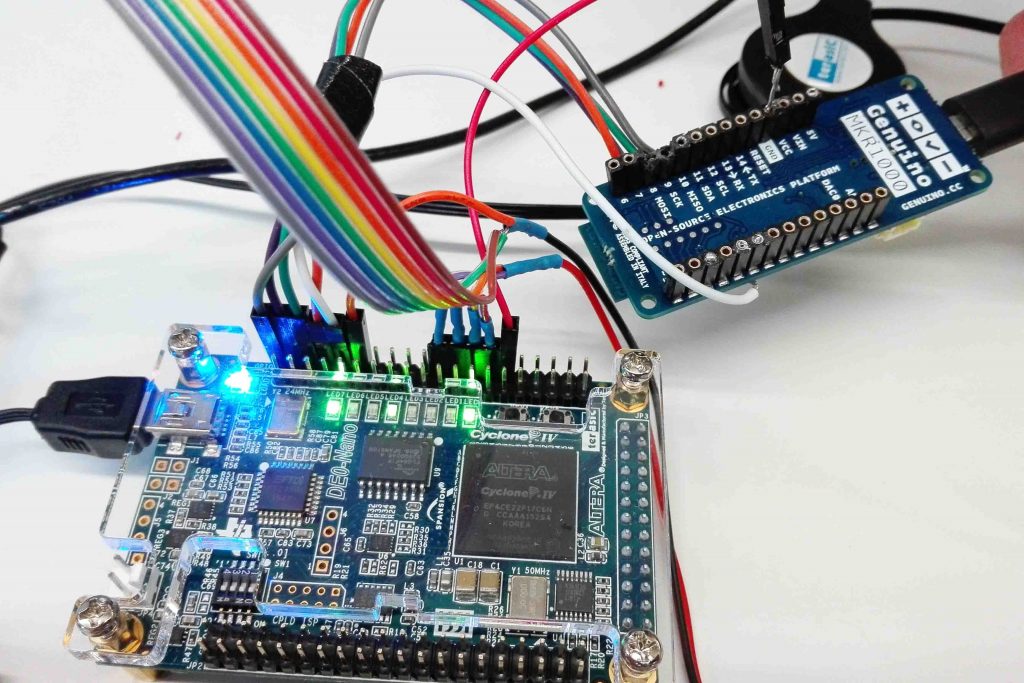

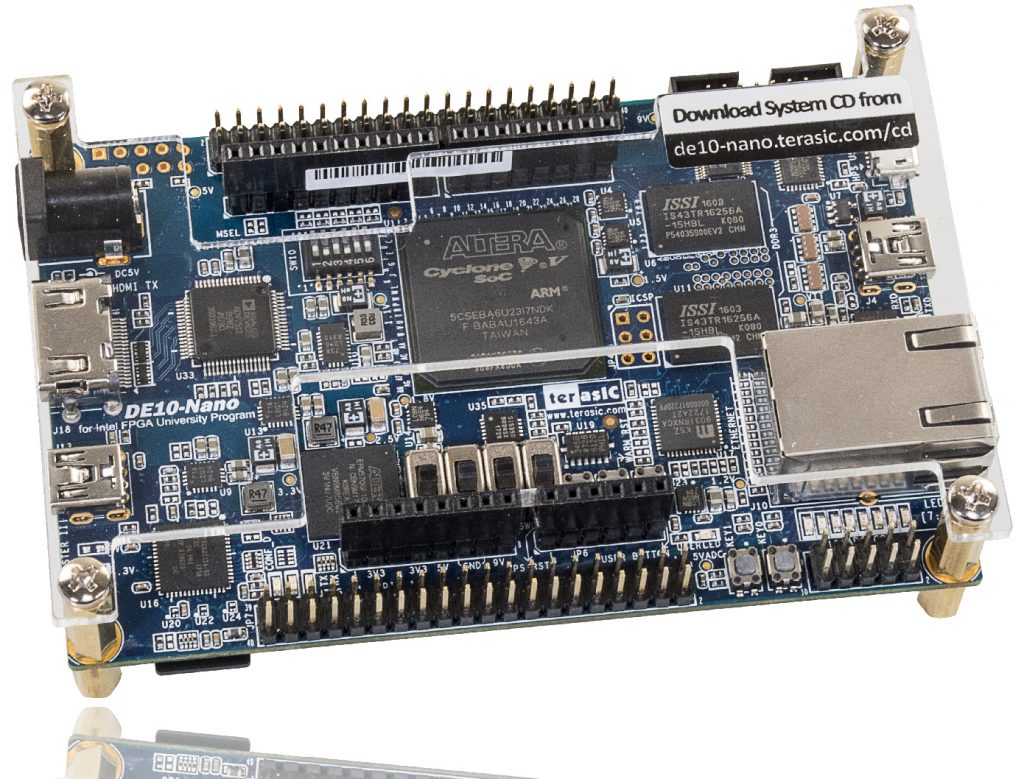

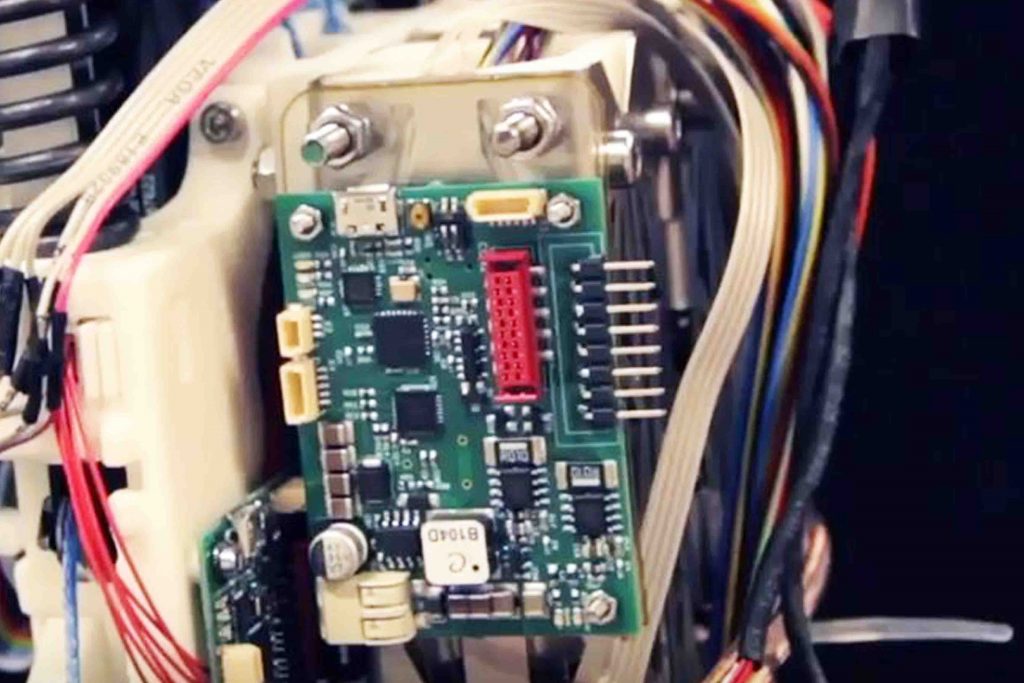

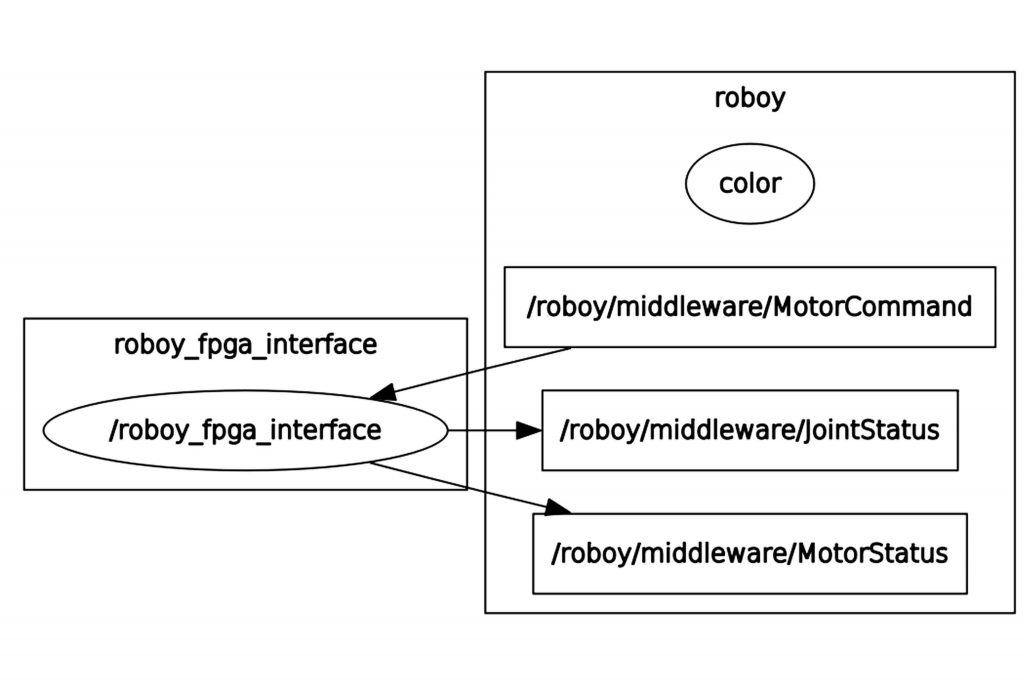

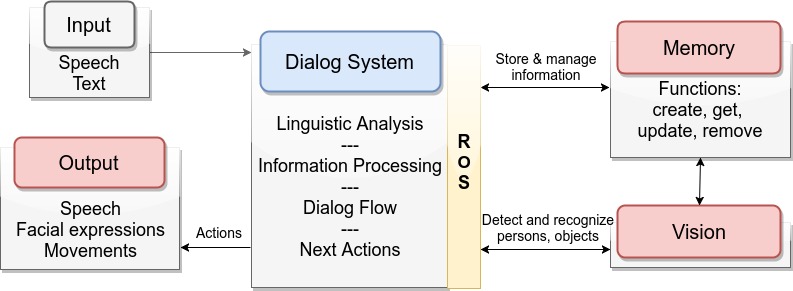

MIDDLEWARE

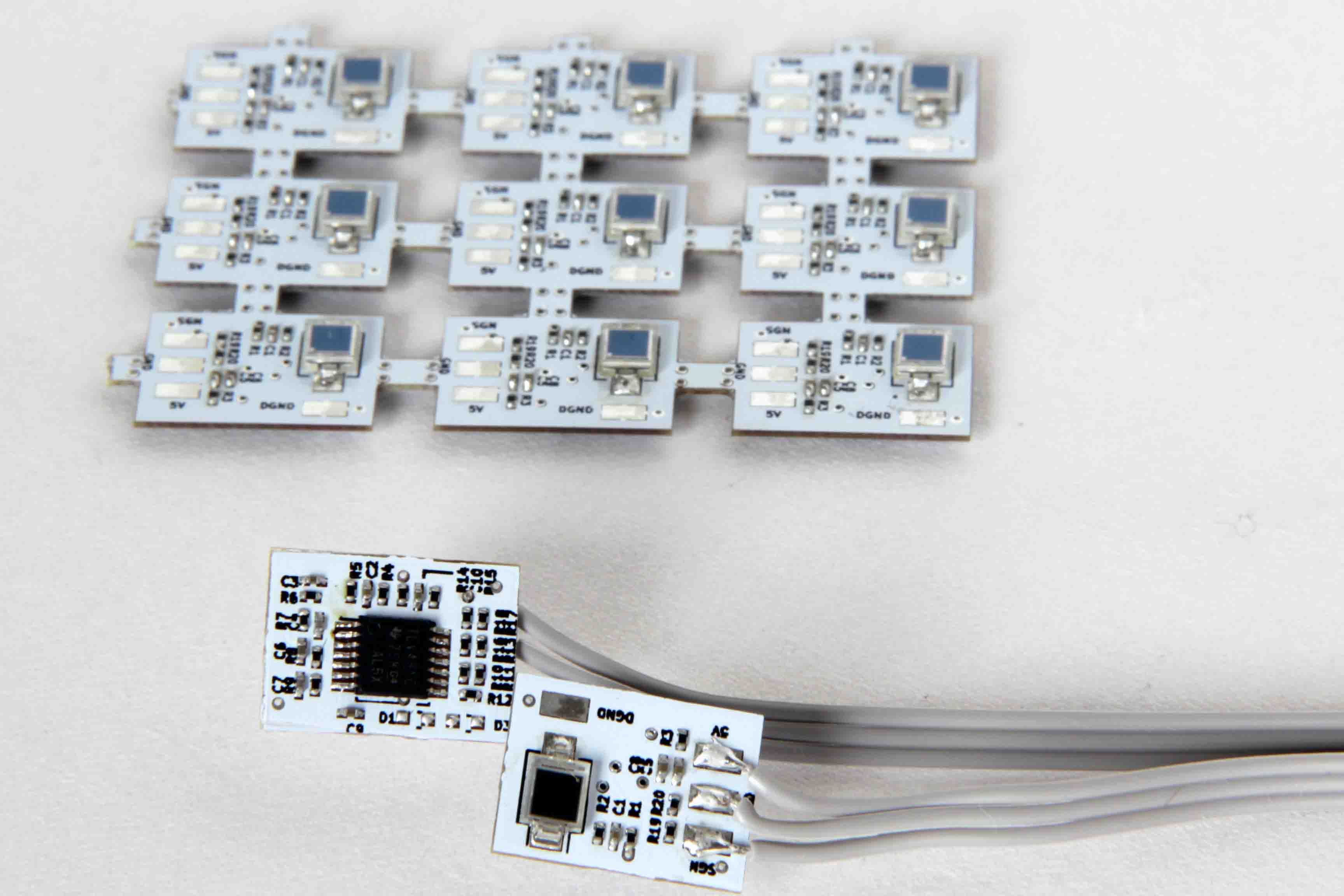

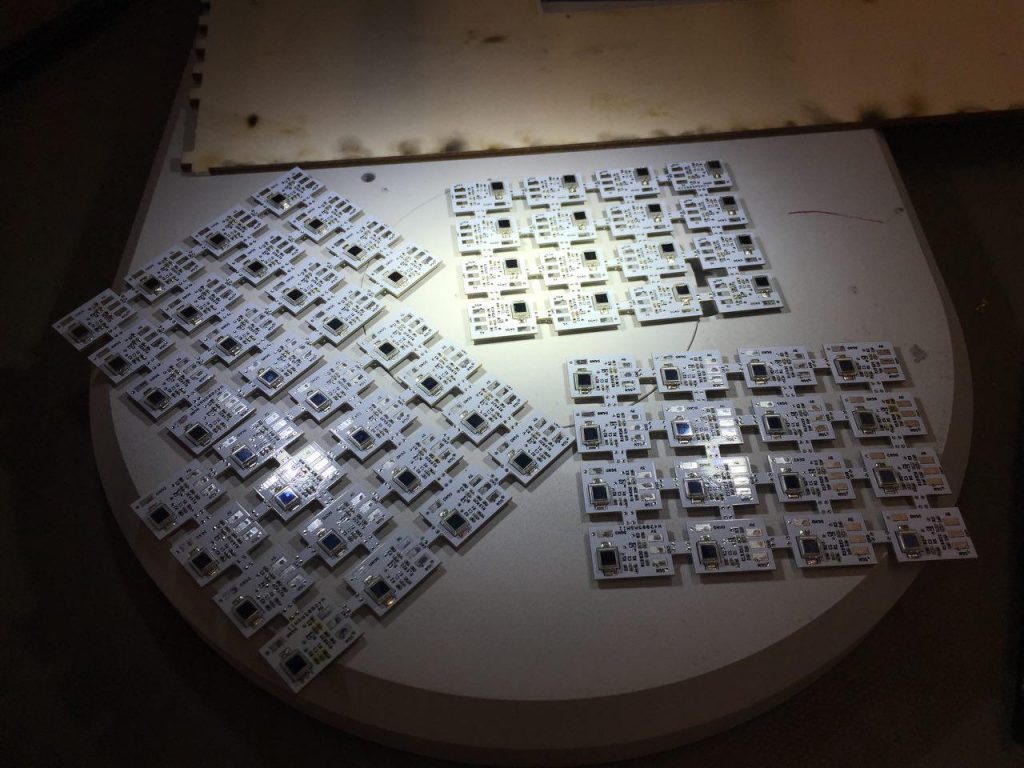

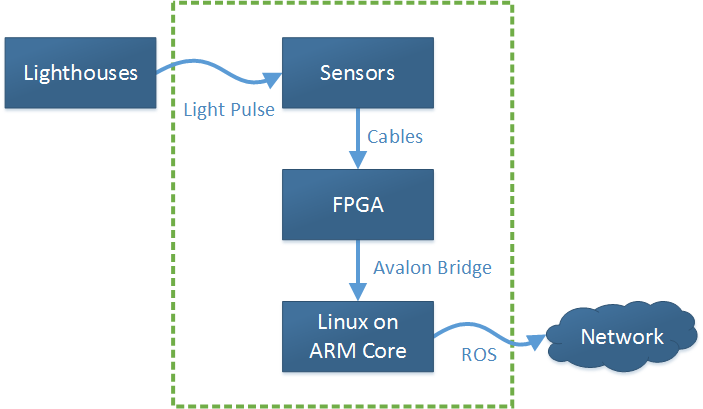

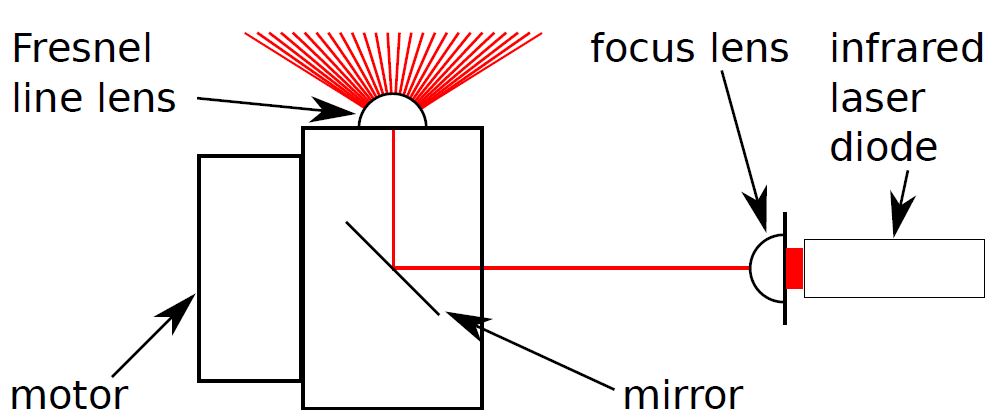

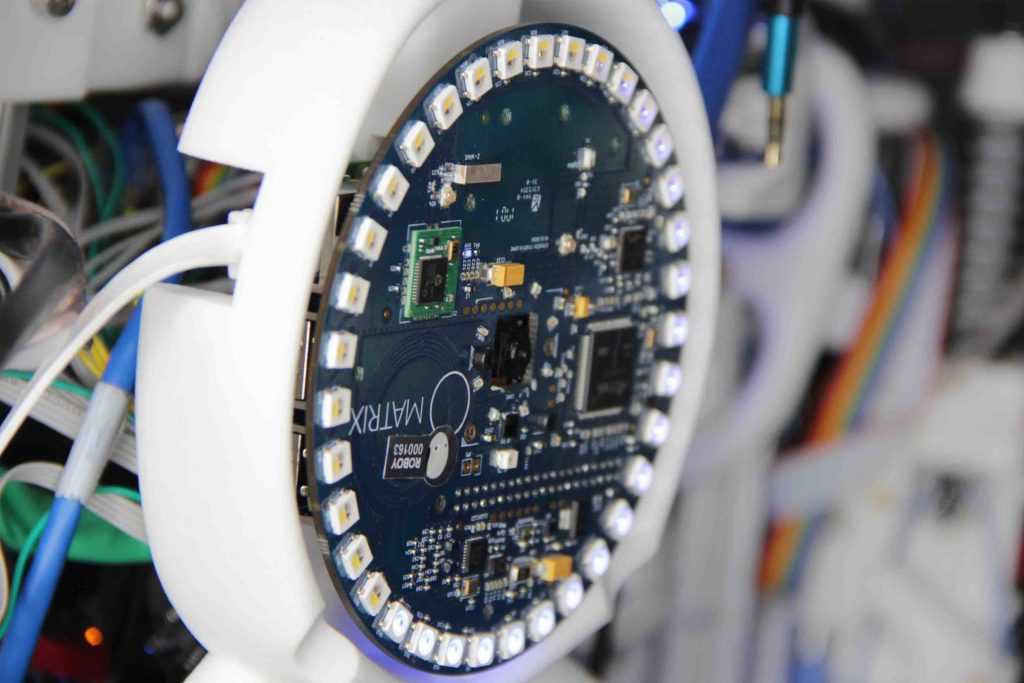

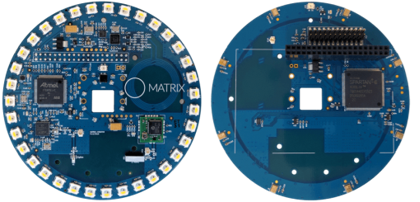

FPGA-based low-level control running a PID loop at 2500 Hz for almost 50 motors, accessible from ROS. Also reverse-engineering Vive tracking, and more.
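To give a feel for what a PID loop at 2500 Hz means, here is a minimal Python sketch of one discrete PID controller driving a single simulated motor. It is an illustration only, not Roboy’s FPGA implementation: the `PID` class, the gains, the output limit and the first-order motor model in `simulate` are all hypothetical values chosen so that the example converges.

```python
from dataclasses import dataclass

CONTROL_RATE_HZ = 2500          # loop rate quoted on this page
DT = 1.0 / CONTROL_RATE_HZ      # 0.4 ms per control step


@dataclass
class PID:
    """Textbook discrete PID with a clamped output (hypothetical gains)."""
    kp: float
    ki: float
    kd: float
    dt: float
    out_limit: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, setpoint: float, measured: float) -> float:
        error = setpoint - measured
        self.integral += error * self.dt                   # rectangular integration
        derivative = (error - self.prev_error) / self.dt   # backward difference
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp the command to the actuator range (no anti-windup in this sketch).
        return max(-self.out_limit, min(self.out_limit, out))


def simulate(seconds: float = 1.0, setpoint: float = 1.0) -> float:
    """Run the loop against an assumed first-order motor model and
    return the final velocity. The model is: tau * dv/dt = u - v."""
    tau = 0.05  # assumed motor time constant in seconds
    pid = PID(kp=2.0, ki=40.0, kd=0.001, dt=DT, out_limit=10.0)
    velocity = 0.0
    for _ in range(int(seconds * CONTROL_RATE_HZ)):
        command = pid.step(setpoint, velocity)
        velocity += DT * (command - velocity) / tau   # explicit Euler plant update
    return velocity


if __name__ == "__main__":
    print(f"velocity after 1 s: {simulate():.6f}")
```

On the robot, one such loop would run per motor in hardware, with setpoints arriving from ROS; the sketch only shows the arithmetic of a single loop.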

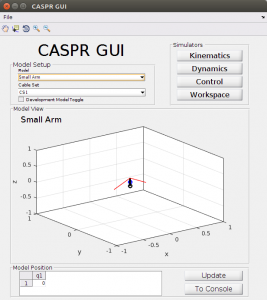

CONTROL

Building on collaborations with different chairs, Control enables joint-space control of musculoskeletal robots. It also uses spiking and classical neural networks to tame the muscles.

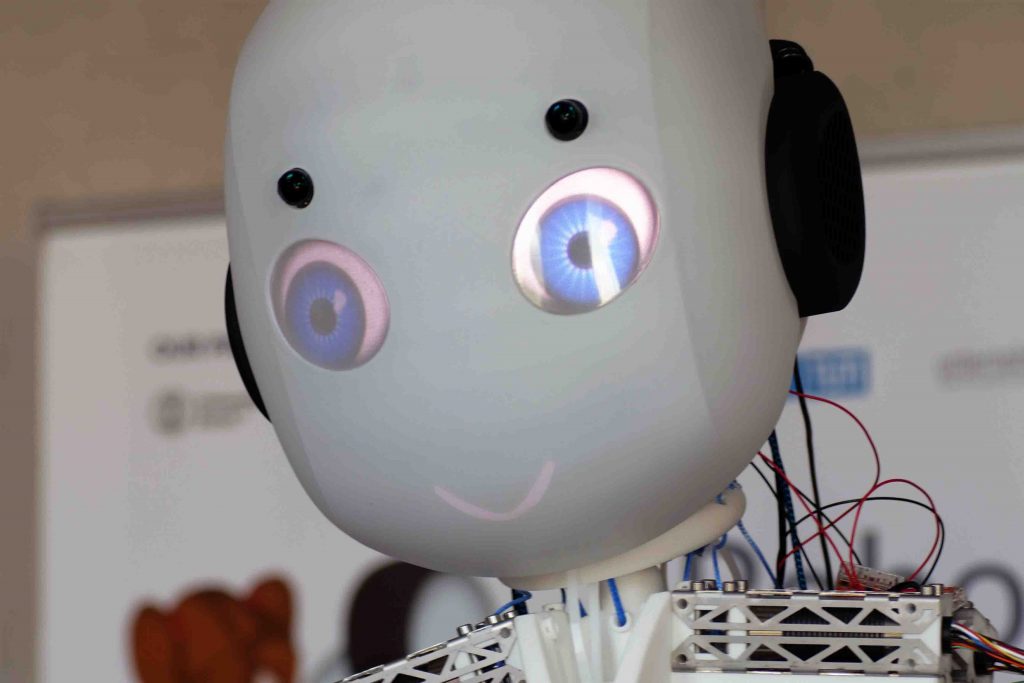

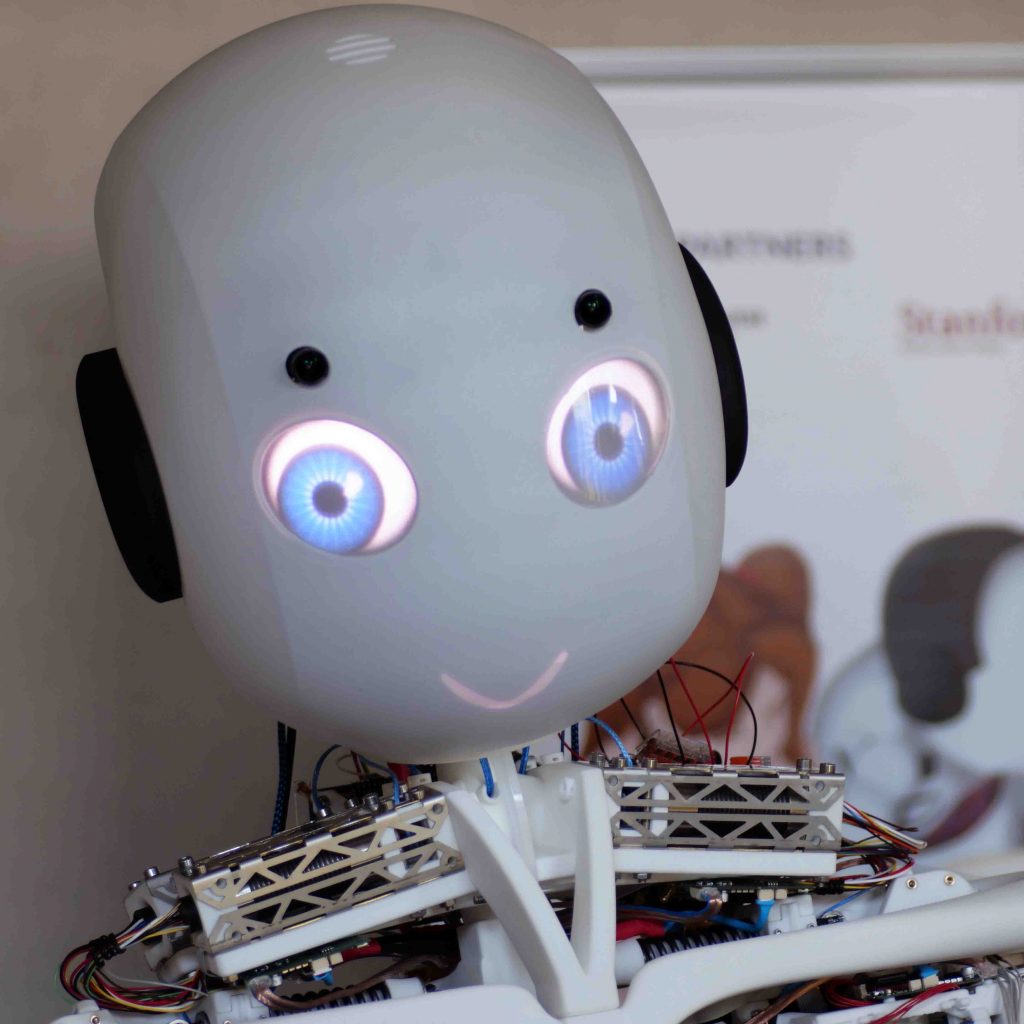

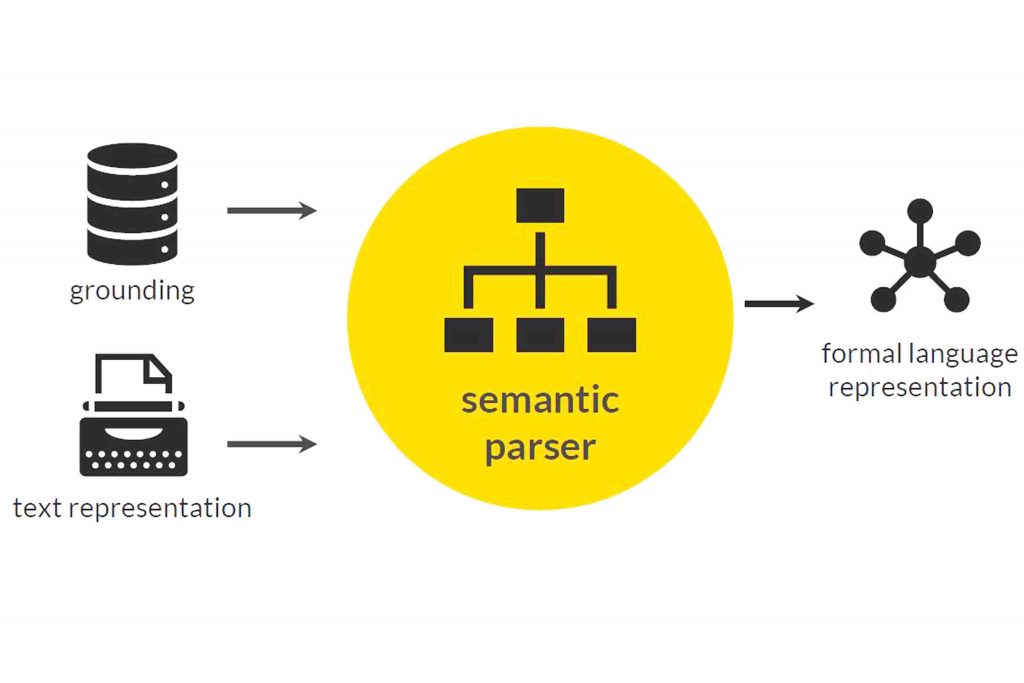

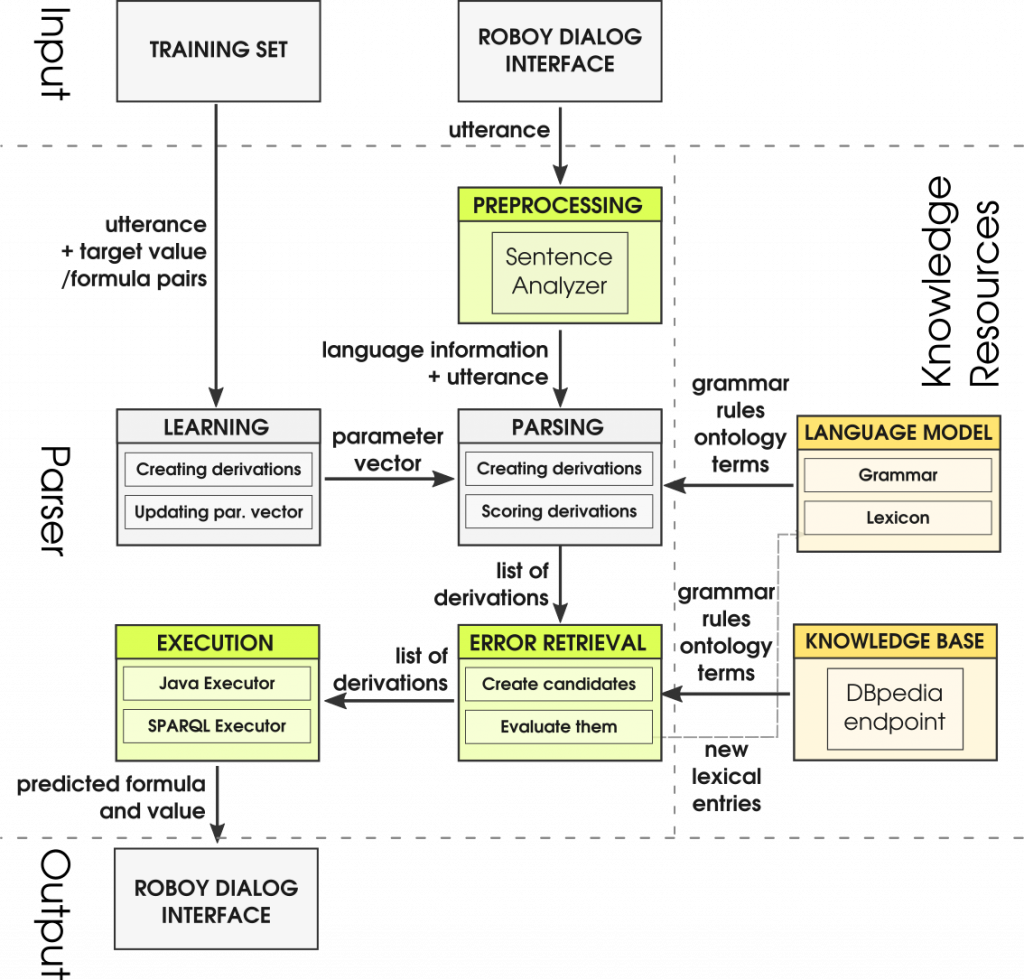

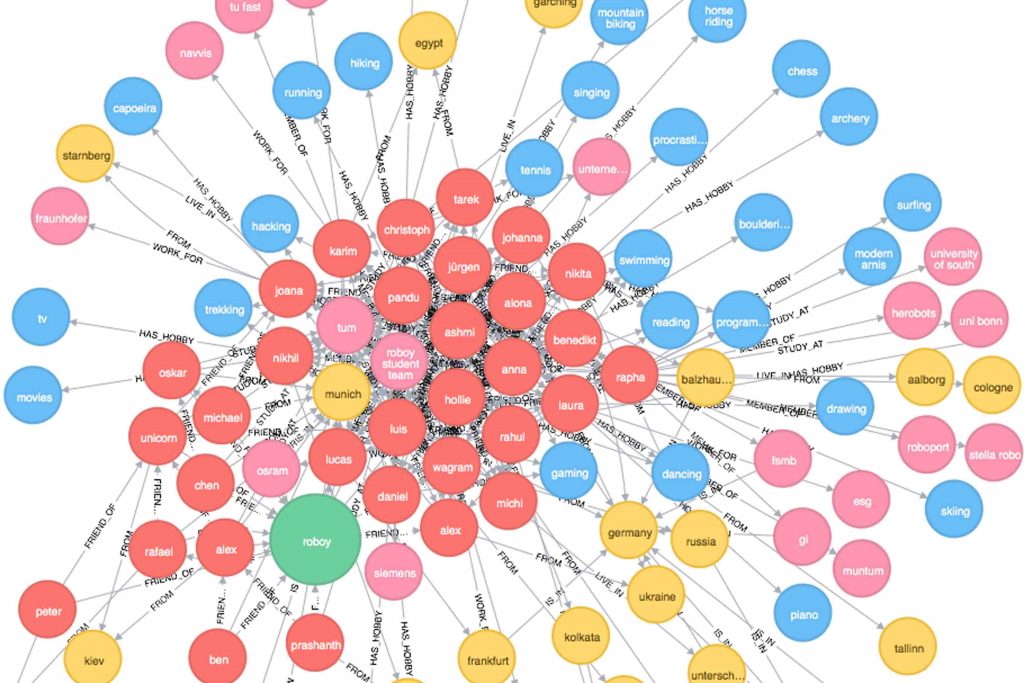

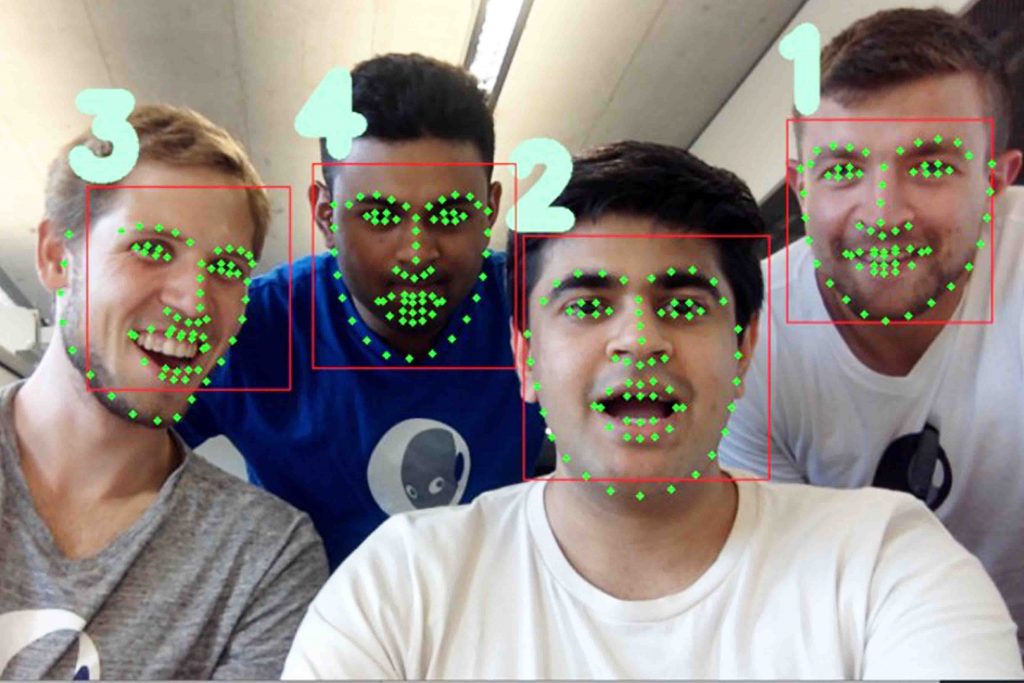

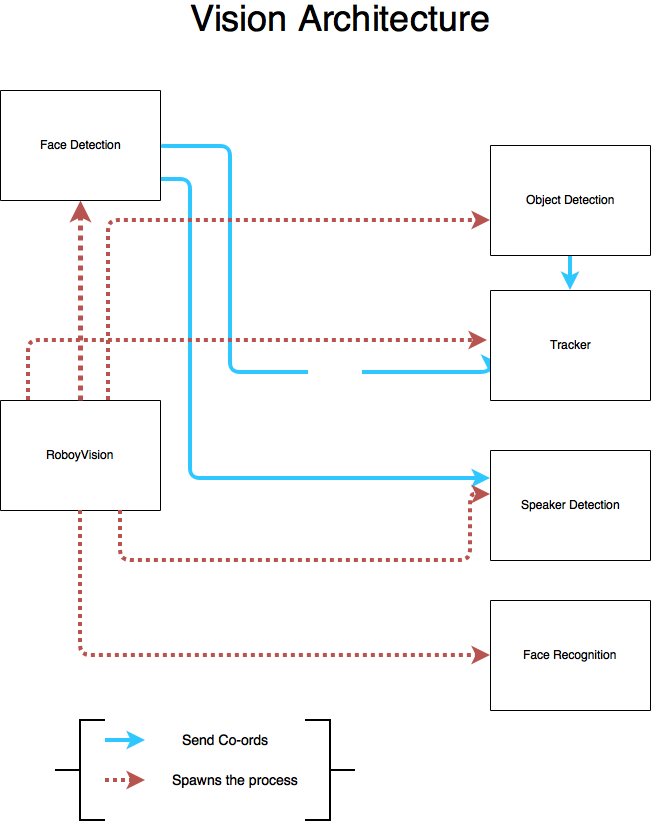

COGNITION

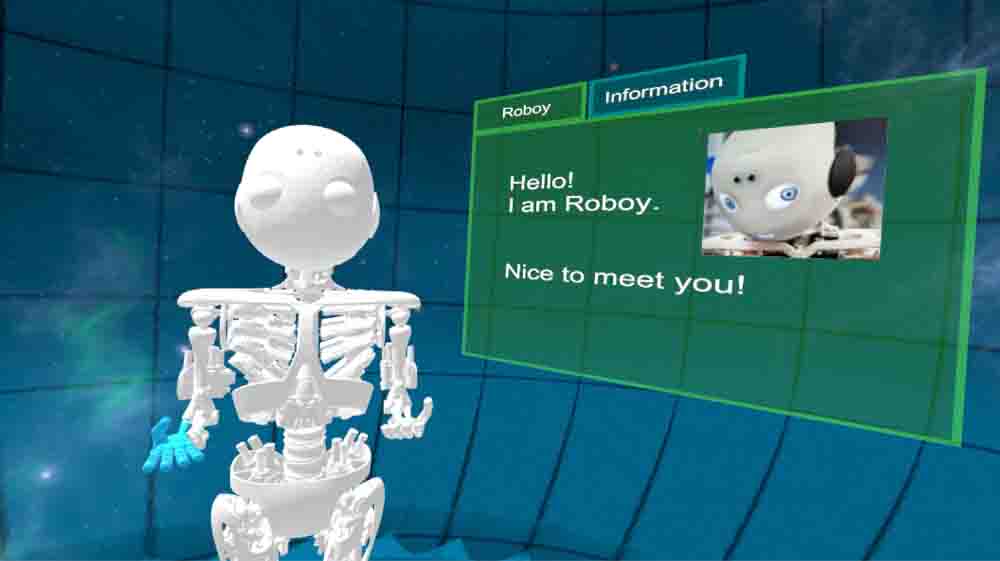

A robot without a brain is just a body! Cognition makes Roboy fun, interesting and likeable. It is also where most of our deep neural networks live.

GROWTH

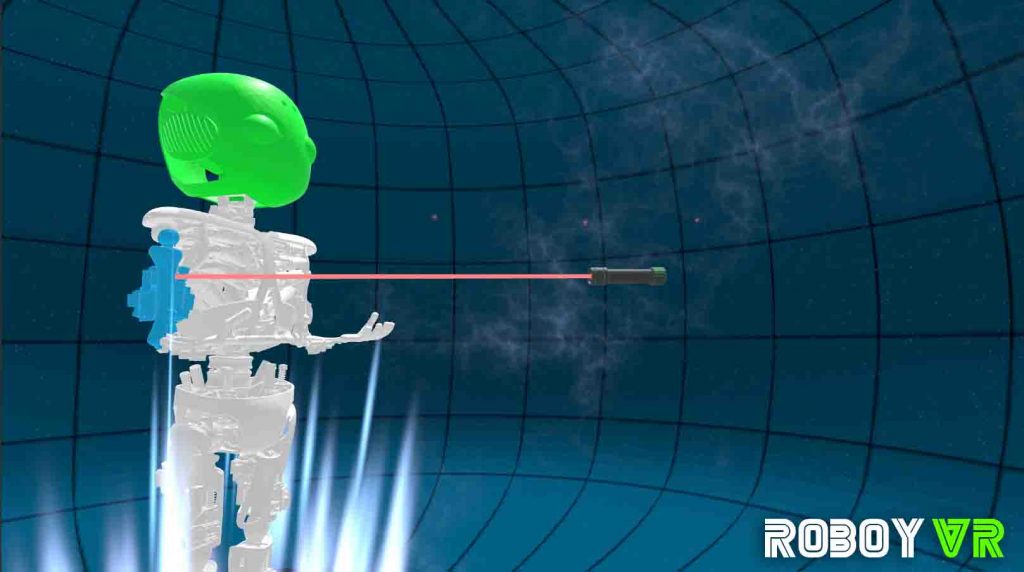

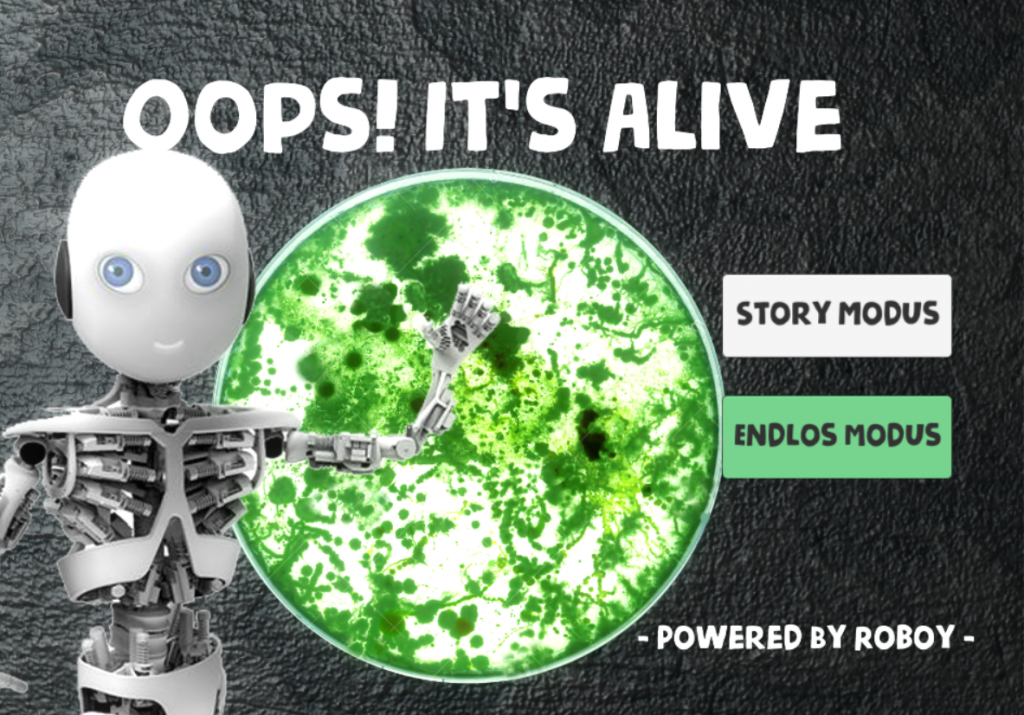

What would you do if you had a robot? Correct! Play with it. VR, AR, simulation and games based on and with Roboy!